AI Terminology Demystified: 50 Essential Terms Every Leader Should Know

Published by The Consultancy World | AI Strategy Experts | Last Updated: December 2025

The AI industry is rife with technical jargon that can confuse business leaders and hinder strategic decision-making. Terms like "neural networks," "transformers," "fine-tuning," and "hallucinations" are frequently used in vendor presentations, news articles, and board discussions - yet their meanings often remain unclear.

This comprehensive guide defines 50 essential AI terms that every business leader should understand. Rather than providing dry dictionary definitions, we explain each term in business context, clarifying why it matters for your organisation and how it impacts implementation decisions.

Understanding this vocabulary empowers you to evaluate vendor claims critically, ask informed questions, set realistic expectations and make confident strategic decisions about AI investments.

How to Use This Guide

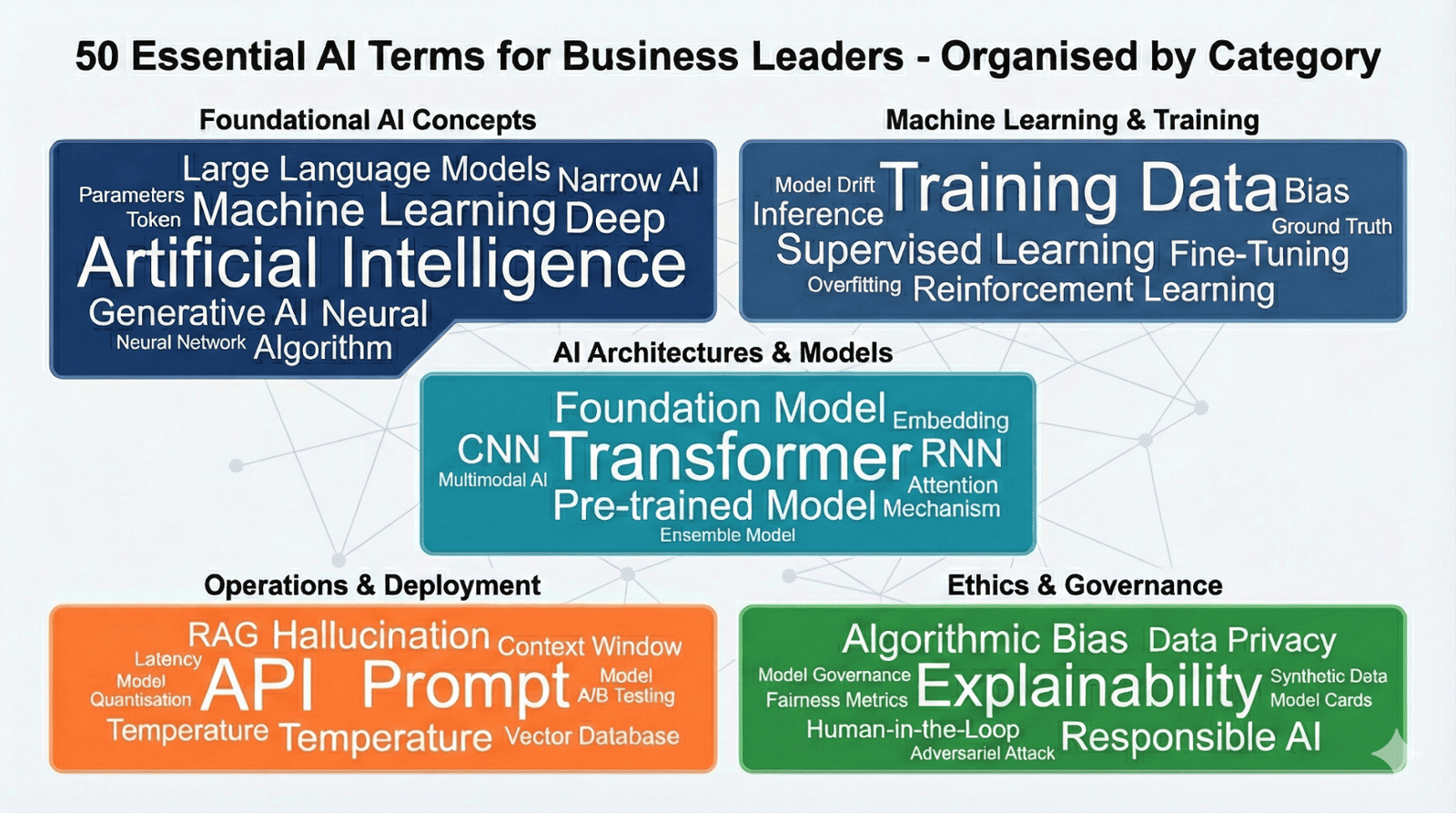

This glossary is organised into five thematic categories for easy reference:

1. Foundational AI Concepts (Terms 1-10)

2. Machine Learning and Training (Terms 11-20)

3. AI Architectures and Models (Terms 21-30)

4. AI Operations and Deployment (Terms 31-40)

5. AI Ethics and Governance (Terms 41-50)

Each term includes:

• Clear definition in plain business language

• Why it matters for business leaders

• Real-world example demonstrating the concept

• Related terms for deeper understanding

Category 1: Foundational AI Concepts

1. Artificial Intelligence (AI)

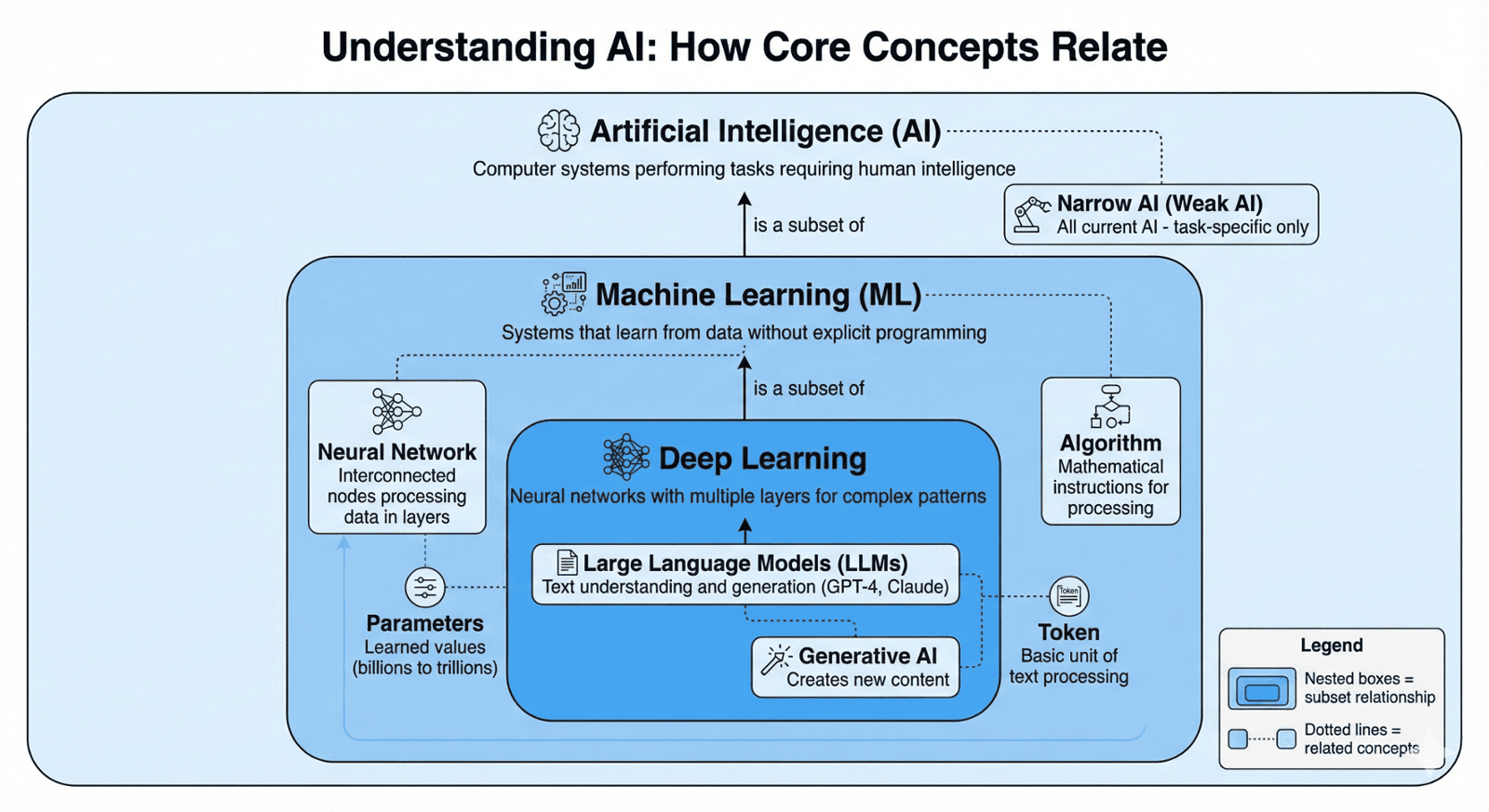

Definition: Computer systems designed to perform tasks that typically require human intelligence - learning from experience, recognising patterns, making decisions, understanding language.

Why It Matters: AI is the broadest term encompassing all intelligent systems. Understanding this helps you recognise when vendors are using "AI" as a marketing buzzword vs. describing genuine intelligent capabilities.

Example: A fraud detection system that learns to identify suspicious patterns in transaction data rather than following rigid rules.

Related Terms: Machine Learning, Deep Learning, Narrow AI

2. Machine Learning (ML)

Definition: A subset of AI where systems learn from data and improve their performance without being explicitly programmed for every scenario.

Why It Matters: Most modern AI business applications use ML. Understanding this distinction helps you recognise when you need actual learning systems vs. traditional rule-based software.

Example: An email spam filter that learns what constitutes spam from examples rather than following a list of spam words.

Related Terms: Supervised Learning, Unsupervised Learning, Training Data

3. Deep Learning

Definition: A specialised subset of Machine Learning using artificial neural networks with multiple layers to automatically discover complex patterns in data.

Why It Matters: Deep Learning powers advanced capabilities like image recognition and natural language processing. It requires significant data and computing resources - understanding this prevents unrealistic expectations.

Example: Tesla's autonomous driving system using deep learning to identify pedestrians, vehicles and road conditions from camera feeds.

Related Terms: Neural Networks, Convolutional Neural Networks, Transformers

4. Narrow AI (Weak AI)

Definition: AI systems designed to perform specific tasks exceptionally well but that cannot transfer their knowledge to unrelated domains. All current AI is Narrow AI.

Why It Matters: Prevents unrealistic expectations. Your customer service chatbot won't suddenly be able to write marketing copy or forecast sales without separate training.

Example: AlphaGo defeats world champions at Go but cannot play chess, drive a car, or write emails.

Related Terms: Artificial General Intelligence (AGI), Domain-Specific AI

5. Generative AI

Definition: AI systems that create new content - text, images, code, audio, or video - rather than just analysing or classifying existing data.

Why It Matters: Represents the most accessible and immediately useful AI for business. Tools like ChatGPT and Midjourney are generative AI, enabling content creation at scale.

Example: ChatGPT writing product descriptions, Midjourney creating marketing images, GitHub Copilot generating code.

Related Terms: Large Language Models, Diffusion Models, Content Generation

6. Large Language Models (LLMs)

Definition: Generative AI systems trained on massive text datasets to understand and generate human-like text. Examples include GPT-4, Claude, and Gemini.

Why It Matters: LLMs power most modern business AI applications - chatbots, content generation, analysis, translation. Understanding their capabilities and limitations is crucial for realistic planning.

Example: GPT-4 powering ChatGPT, used by millions of businesses for customer service, content creation, and analysis.

Related Terms: Transformers, Pre-training, Fine-Tuning

7. Algorithm

Definition: A set of mathematical instructions that tells a computer how to process data and make decisions. The "recipe" that AI follows.

Why It Matters: Different algorithms have different strengths. When vendors discuss their "proprietary algorithms," you should understand they're talking about the mathematical approach to solving your problem.

Example: Netflix's recommendation algorithm analyses your viewing history to suggest shows you'll likely enjoy.

Related Terms: Neural Networks, Decision Trees, Regression

8. Neural Network

Definition: An AI architecture inspired by human brain structure, consisting of interconnected nodes (neurons) organised in layers that process and transform data.

Why It Matters: Neural networks are the foundation of modern AI capabilities. Understanding this helps you grasp why AI can handle complex, nuanced problems that simpler systems cannot.

Example: Image recognition systems using neural networks to identify objects in photos by processing visual information through multiple layers.

Related Terms: Deep Learning, Nodes, Weights, Layers

9. Parameters

Definition: The adjustable numerical values within an AI model that are learned during training. More parameters generally enable more sophisticated understanding.

Why It Matters: "Parameter count" is frequently cited in AI specifications. GPT-4 is rumoured to have around 1.76 trillion parameters, although OpenAI has not confirmed the figure. More isn't always better, but it indicates model capacity.

Example: A smaller model with 7 billion parameters might handle simple tasks efficiently, whilst a 175-billion parameter model handles complex reasoning.

Related Terms: Model Size, Training, Fine-Tuning

10. Token

Definition: The basic unit of text processing in language models. Roughly equivalent to a word or word fragment (e.g., "ChatGPT" might be split into "Chat" and "GPT").

Why It Matters: Most LLM services accessed via API charge by the number of tokens processed. Understanding tokens helps you estimate costs and understand context window limitations.

Example: The sentence "AI transforms business" is typically around 3-5 tokens, depending on the model's tokeniser. API pricing might be £0.01 per 1,000 tokens.

Related Terms: Context Window, API Pricing, Input/Output
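For budgeting purposes, the token arithmetic in the example above can be sketched in a few lines of Python. This is a rough illustration only: the £0.01 per 1,000 tokens price is the illustrative figure from the example, the 0.75 words-per-token ratio is a commonly quoted rule of thumb for English text rather than an exact value, and the function names are hypothetical.

```python
# Rough token and cost estimate. All figures are illustrative assumptions.
WORDS_PER_TOKEN = 0.75  # rule of thumb for English text; varies by model


def estimate_tokens(word_count: int) -> int:
    """Approximate the number of tokens in a text of `word_count` words."""
    return round(word_count / WORDS_PER_TOKEN)


def estimate_cost(tokens: int, price_per_1k_tokens: float) -> float:
    """Cost of processing `tokens` tokens at a given price per 1,000 tokens."""
    return tokens / 1000 * price_per_1k_tokens


if __name__ == "__main__":
    tokens = estimate_tokens(3000)       # a 3,000-word report
    cost = estimate_cost(tokens, 0.01)   # £0.01 per 1,000 tokens (example price)
    print(f"{tokens} tokens, about £{cost:.2f}")
```

Actual token counts depend on the provider's tokeniser, and many providers price input and output tokens differently, so treat the result as an order-of-magnitude estimate.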

Category 2: Machine Learning and Training

11. Training Data

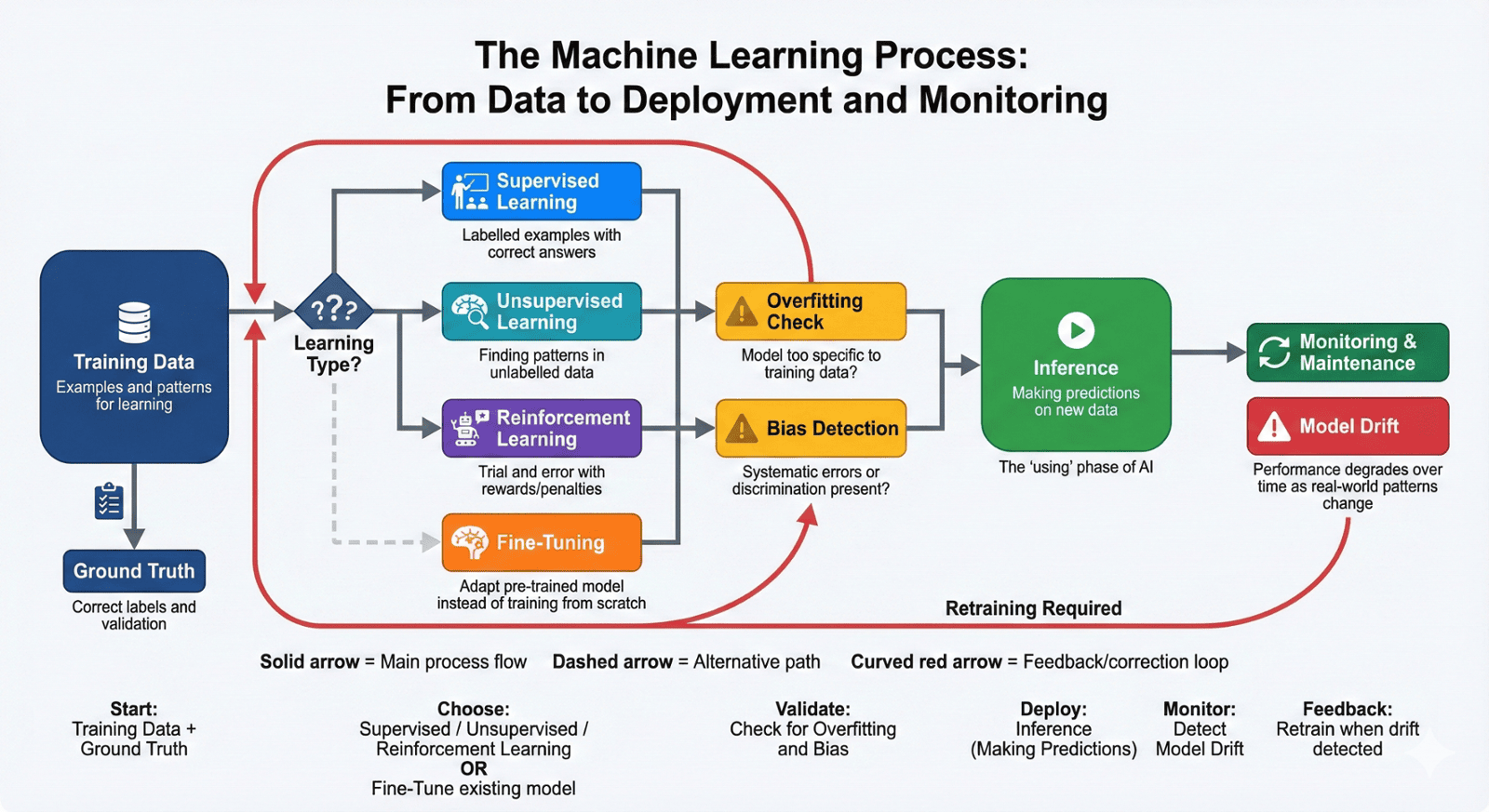

Definition: The examples, information, and patterns that an AI system learns from during development. Quality and quantity of training data directly impact AI performance.

Why It Matters: "Garbage in, garbage out" applies doubly to AI. Poor training data creates poor AI systems. You'll need quality data for custom ML projects.

Example: To train a customer churn prediction model, you need historical data showing which customers left and which stayed, along with their behaviour patterns.

Related Terms: Labelled Data, Dataset, Data Quality

12. Supervised Learning

Definition: Machine learning where the AI learns from labelled examples - data where the correct answer is provided during training.

Why It Matters: Most business AI applications use supervised learning. Understanding this helps you recognise when you'll need expensive data labelling efforts.

Example: Training an email spam filter by showing it thousands of emails labelled "spam" or "not spam."

Related Terms: Labelled Data, Classification, Regression

13. Unsupervised Learning

Definition: Machine learning where the AI finds patterns in unlabelled data without being told what to look for.

Why It Matters: Useful when you have data but don't know what patterns exist. Common for customer segmentation and anomaly detection.

Example: Analysing customer purchase behaviour to automatically identify different customer segments without predefined categories.

Related Terms: Clustering, Anomaly Detection, Pattern Recognition

14. Reinforcement Learning

Definition: Machine learning where an AI learns through trial and error, receiving rewards for desired outcomes and penalties for undesired ones.

Why It Matters: Excellent for optimisation problems like pricing, inventory, or resource allocation. More complex to implement than supervised learning.

Example: Dynamic pricing systems that learn optimal price points by testing different prices and observing sales results.

Related Terms: Reward Function, Agent, Environment

15. Fine-Tuning

Definition: Adapting a pre-trained AI model to your specific use case by training it on your data and requirements, rather than training from scratch.

Why It Matters: Dramatically reduces costs and time for custom AI. Instead of spending £500K training a model from scratch, spend £25K fine-tuning an existing model.

Example: Taking GPT-4 and fine-tuning it with your company's documentation and communication style to create a branded chatbot.

Related Terms: Transfer Learning, Pre-trained Models, Custom Models

16. Inference

Definition: The process of an AI model making predictions or generating outputs on new data after training is complete. This is the "using" phase vs. the "learning" phase.

Why It Matters: Inference costs money and requires computing resources. Understanding this helps with operational budgeting and performance planning.

Example: After training a customer churn model, running it weekly on current customer data to identify at-risk customers is inference.

Related Terms: Prediction, Deployment, Real-Time Inference

17. Model Drift

Definition: When an AI model's performance degrades over time because real-world data patterns have changed since training.

Why It Matters: AI systems require ongoing monitoring and retraining. Understanding drift prevents surprise when your once-accurate system becomes unreliable.

Example: A demand forecasting model trained on pandemic-era data performing poorly post-pandemic because customer behaviour changed.

Related Terms: Retraining, Performance Monitoring, Model Maintenance
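One simple way to monitor for drift is to compare recent accuracy against the accuracy measured when the model was first deployed. The sketch below assumes you can obtain the true outcomes for recent predictions; the function names and the 5-percentage-point tolerance are hypothetical choices, not an industry standard.

```python
def accuracy(predictions, actuals):
    """Fraction of predictions that matched what actually happened."""
    correct = sum(p == a for p, a in zip(predictions, actuals))
    return correct / len(actuals)


def has_drifted(baseline_accuracy, recent_accuracy, tolerance=0.05):
    """Flag drift when accuracy has fallen by more than `tolerance`."""
    return (baseline_accuracy - recent_accuracy) > tolerance


if __name__ == "__main__":
    baseline = 0.92  # accuracy measured at deployment (made-up figure)
    recent = accuracy(
        predictions=["stay", "leave", "stay", "stay"],
        actuals=["stay", "leave", "leave", "stay"],
    )
    if has_drifted(baseline, recent):
        print("Accuracy has dropped: consider retraining the model")
```

In practice, teams usually track several metrics over rolling time windows rather than a single accuracy figure, but the principle is the same.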

18. Overfitting

Definition: When an AI model learns the training data too specifically, including noise and quirks, resulting in poor performance on new data.

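Why It Matters: A common problem in custom AI projects. Overfitted models look great on the data they were trained on but fail on data they have not seen before, which is why performance must be checked on a separate validation set before going to production.

Example: A fraud detection model that effectively memorises the individual transactions in its training set, scoring near-perfectly on them, but misses fraudulent transactions that differ even slightly from those examples.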

Related Terms: Generalisation, Training/Validation Split, Regularisation

19. Bias (in AI)

Definition: Systematic errors in AI outputs caused by biased training data or algorithms, often reflecting societal prejudices present in the data.

Why It Matters: Biased AI can lead to discrimination, legal liability, and reputational damage. Critical consideration for hiring, lending, or customer-facing AI.

Example: A recruitment AI trained on historical hiring data might discriminate against women if past hiring was male-dominated.

Related Terms: Fairness, Ethics, Algorithmic Discrimination

20. Ground Truth

Definition: The actual, correct answer or label for training data - the objective reality the AI is trying to learn.

Why It Matters: Establishing accurate ground truth is expensive and crucial. Incorrect ground truth creates fundamentally flawed AI systems.

Example: For medical imaging AI, ground truth is the correct diagnosis confirmed by expert pathologists - not just initial impressions.

Related Terms: Labelled Data, Accuracy, Validation

Category 3: AI Architectures and Models

21. Transformer

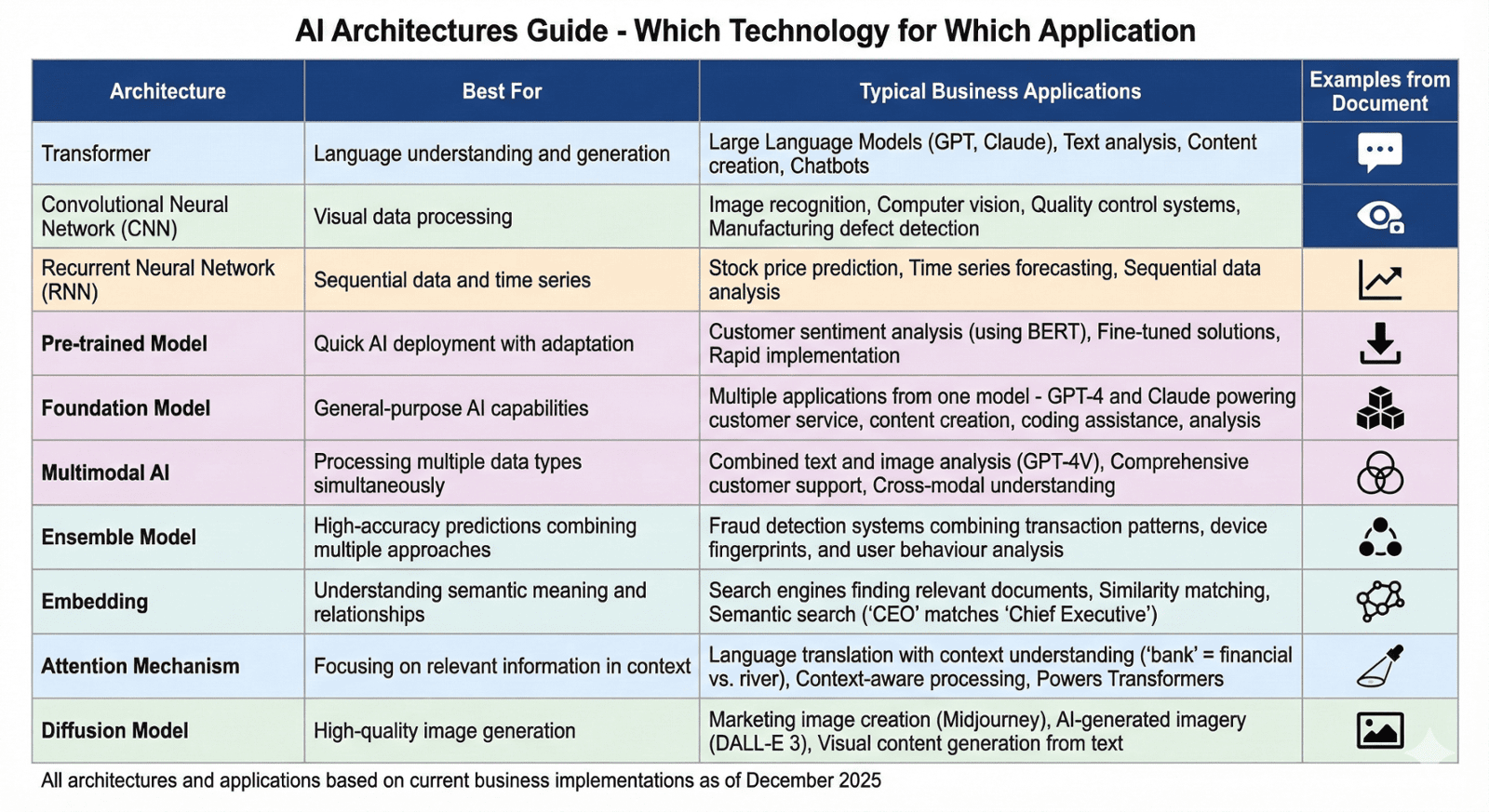

Definition: A neural network architecture using "attention mechanisms" to process entire sequences of data simultaneously. Foundation of modern language models like GPT and Claude.

Why It Matters: Transformers revolutionised AI capabilities. When vendors mention "transformer-based" models, they're using state-of-the-art architecture.

Example: GPT (Generative Pre-trained Transformer) uses transformer architecture to understand and generate human-like text.

Related Terms: Attention Mechanism, Large Language Models, BERT

22. Convolutional Neural Network (CNN)

Definition: A neural network architecture specialised for processing visual data, using filters to detect features regardless of their position in an image.

Why It Matters: CNNs power image recognition, computer vision, and visual quality control systems. Essential for any AI involving images or video.

Example: Quality control systems in manufacturing using CNNs to detect product defects from camera images.

Related Terms: Computer Vision, Image Recognition, Feature Extraction

23. Recurrent Neural Network (RNN)

Definition: A neural network architecture designed for sequential data, maintaining memory of previous inputs to understand context and sequences.

Why It Matters: Useful for time series data, though largely superseded by transformers for language tasks. Important for understanding older AI systems.

Example: Stock price prediction systems analysing time series of historical prices to forecast future movements.

Related Terms: LSTM, Time Series, Sequential Data

24. Pre-trained Model

Definition: An AI model that has already been trained on massive datasets and can be used directly or fine-tuned for specific tasks.

Why It Matters: Pre-trained models democratise AI access. You don't need millions of pounds to develop AI - you can leverage pre-trained models.

Example: Using BERT (pre-trained on a large corpus of books and English Wikipedia text) for your customer sentiment analysis by fine-tuning it with your customer feedback data.

Related Terms: Transfer Learning, Fine-Tuning, Foundation Models

25. Foundation Model

Definition: Large-scale AI models trained on broad data that can be adapted to many different tasks. LLMs like GPT-4 and Claude are foundation models.

Why It Matters: Foundation models provide general-purpose AI capabilities. Understanding this helps you recognise opportunities to leverage existing models vs. building custom.

Example: GPT-4 serving as a foundation model for thousands of applications - customer service, content creation, coding assistance, analysis.

Related Terms: Pre-trained Model, Large Language Model, Multi-Task Learning

26. Multimodal AI

Definition: AI systems that can process and understand multiple types of data - text, images, audio, video - simultaneously.

Why It Matters: Represents the cutting edge of AI capabilities. Enables more sophisticated applications combining different information types.

Example: GPT-4V analysing both product images and text descriptions to provide comprehensive customer support responses.

Related Terms: Vision-Language Models, Audio Processing, Cross-Modal Learning

27. Ensemble Model

Definition: Combining multiple AI models to make predictions, typically achieving better performance than any single model.

Why It Matters: Many production AI systems use ensembles for improved accuracy and reliability. Understanding this explains why some solutions are more complex.

Example: A fraud detection system combining three different models - one analysing transaction patterns, one checking device fingerprints, one assessing user behaviour - and making decisions based on majority vote.

Related Terms: Model Combination, Stacking, Bagging
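The majority-vote idea in the fraud example can be illustrated with a minimal Python sketch. The three "model" verdicts here are hard-coded placeholders standing in for real model outputs, and the function name is hypothetical.

```python
from collections import Counter


def majority_vote(votes):
    """Return the label chosen by the most models."""
    label, _count = Counter(votes).most_common(1)[0]
    return label


if __name__ == "__main__":
    # Placeholder verdicts from three hypothetical models
    votes = [
        "fraud",       # transaction-pattern model
        "legitimate",  # device-fingerprint model
        "fraud",       # user-behaviour model
    ]
    print(majority_vote(votes))
```

Using an odd number of models avoids ties on yes/no decisions. Production ensembles often weight each model's vote by its track record instead of counting votes equally.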

28. Embedding

Definition: Mathematical representations of data (words, images, etc.) as numerical vectors that capture semantic meaning and relationships.

Why It Matters: Embeddings enable AI to understand that "CEO" and "Chief Executive" are similar, or that a picture of a cat is similar to other cat pictures.

Example: Search engines using embeddings to find documents relevant to your query even if they don't contain exact keywords.

Related Terms: Vector Representation, Semantic Similarity, Vector Database
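To make the idea of "similar vectors" concrete, the sketch below compares embeddings using cosine similarity, a widely used measure of how closely two vectors point in the same direction. The three-dimensional vectors are made up for illustration; real embeddings come from a trained model and typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two vectors: close to 1.0 means very similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up toy embeddings, for illustration only
embeddings = {
    "CEO": [0.9, 0.1, 0.2],
    "Chief Executive": [0.85, 0.15, 0.25],
    "banana": [0.1, 0.9, 0.3],
}

if __name__ == "__main__":
    for term in ("Chief Executive", "banana"):
        score = cosine_similarity(embeddings["CEO"], embeddings[term])
        print(f"CEO vs {term}: {score:.2f}")
```

With these toy vectors, "CEO" scores much closer to "Chief Executive" than to "banana", which is the behaviour semantic search relies on.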

29. Attention Mechanism

Definition: A technique that allows AI models to focus on the most relevant parts of input data when making predictions, mimicking human selective attention.

Why It Matters: Attention mechanisms enable AI to understand context and relationships, powering breakthrough capabilities in language and vision tasks.

Example: When translating "bank" in a sentence, attention helps the model focus on surrounding words to determine if it means "financial institution" or "river bank."

Related Terms: Transformer, Self-Attention, Context Understanding
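For readers who want to see the mechanics, here is a minimal sketch of scaled dot-product attention for a single query, written in plain Python. The vectors are made-up toy values and the function names are hypothetical; real models compute this for many queries at once using learned vectors and optimised libraries.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Weight each value by how well its key matches the query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


if __name__ == "__main__":
    query = [1.0, 0.0]                           # the word being interpreted
    keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # the surrounding words
    values = [[10.0], [20.0], [30.0]]            # information each word carries
    weights, output = attend(query, keys, values)
    print([round(w, 2) for w in weights], output)
```

The surrounding word whose key best matches the query receives the largest weight, so its information contributes most to the result.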

30. Diffusion Model

Definition: A generative AI approach that creates images by gradually removing noise from random patterns, learning to generate realistic images.

Why It Matters: Diffusion models power leading image generation tools like Midjourney and DALL-E 3, enabling high-quality AI-generated imagery for business use.

Example: Midjourney using diffusion models to generate marketing images from text descriptions.

Related Terms: Image Generation, Generative AI, Stable Diffusion

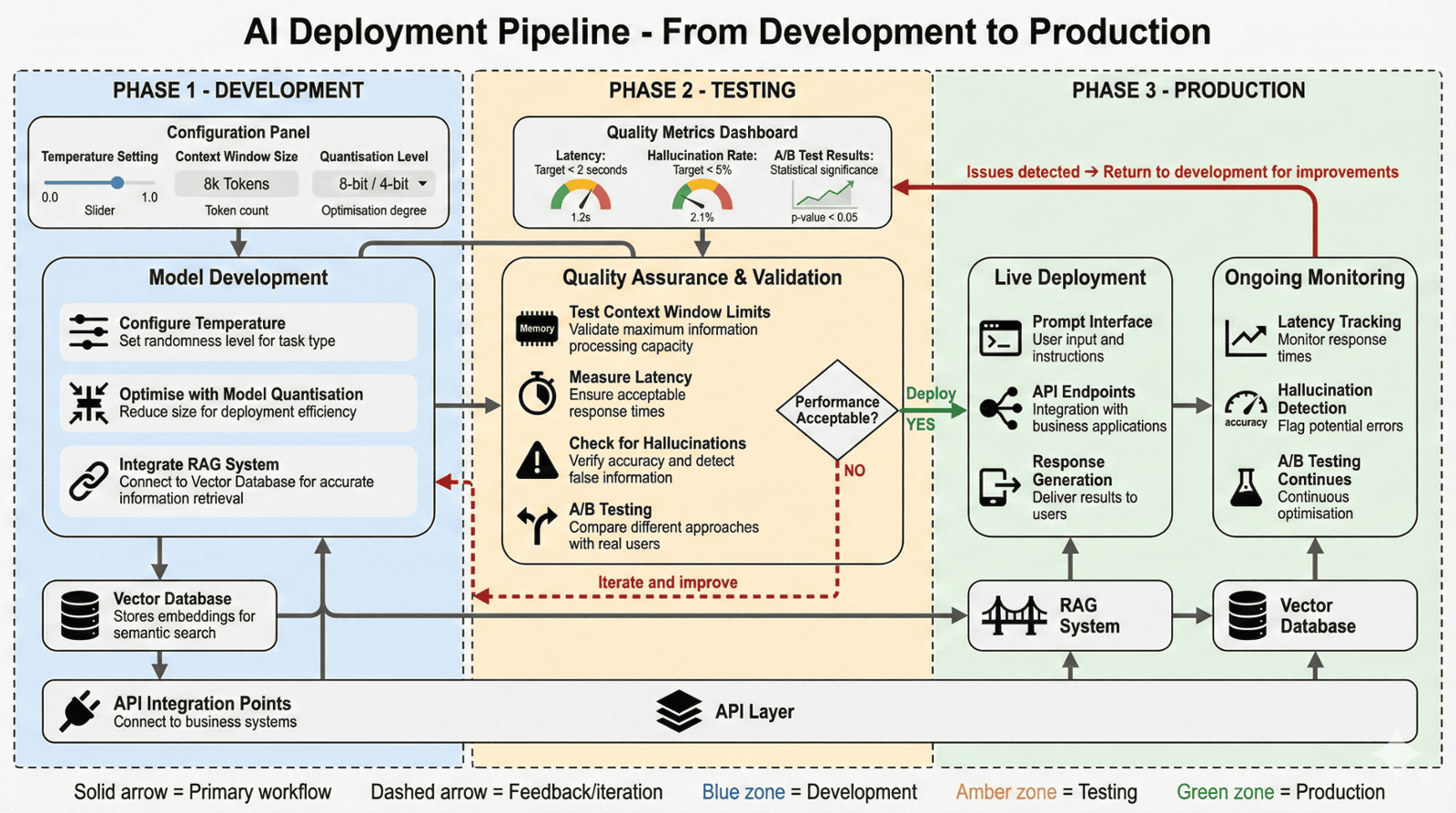

Category 4: AI Operations and Deployment

31. API (Application Programming Interface)

Definition: A way for different software systems to communicate and share data. AI services typically provide APIs allowing integration with your applications.

Why It Matters: APIs are how you connect AI capabilities to your business systems. Understanding APIs helps you assess integration complexity and costs.

Example: Connecting ChatGPT to your customer service platform via OpenAI's API to power an intelligent chatbot.

Related Terms: Integration, REST API, Endpoint

32. Prompt

Definition: The input or instruction given to a generative AI system to produce desired output. Effective prompting significantly impacts result quality.

Why It Matters: Prompt quality determines output quality. Understanding prompt engineering helps you get better results from AI tools.

Example: "Write a professional email to a client" (vague prompt) vs. "Write a 150-word professional email to an existing B2B client explaining a 2-week project delay, maintaining positive tone and offering solutions" (effective prompt).

Related Terms: Prompt Engineering, Instructions, Input

33. Context Window

Definition: The maximum amount of information (measured in tokens) that an AI model can process at once - its "working memory."

Why It Matters: Context window limits determine how much information an AI can consider. Larger windows enable more sophisticated tasks but cost more.

Example: GPT-4 Turbo, with a 128,000-token context window, can analyse entire business reports, whilst smaller models might only handle a few pages.

Related Terms: Token Limit, Input Length, Memory

34. Latency

Definition: The time delay between submitting a request to an AI system and receiving a response. Critical for real-time applications.

Why It Matters: High latency frustrates users and limits applications. Customer service chatbots need low latency; weekly reports can tolerate higher latency.

Example: Real-time fraud detection requiring sub-second responses vs. nightly batch processing of sales forecasts.

Related Terms: Response Time, Performance, Real-Time Inference

35. Hallucination

Definition: When generative AI produces false or nonsensical information presented with apparent confidence. A significant limitation of current LLMs.

Why It Matters: Hallucinations create business risk - false statistics, fabricated references, incorrect advice. Requires human verification for critical applications.

Example: ChatGPT inventing realistic-sounding but entirely false statistics about market trends when asked for specific data.

Related Terms: Confabulation, Factual Errors, Accuracy Limitations

36. Temperature

Definition: A parameter controlling randomness in AI text generation. Lower temperature produces focused, consistent outputs; higher temperature produces creative, varied outputs.

Why It Matters: Adjusting temperature optimises AI for different tasks - low for consistent customer service responses, high for creative brainstorming.

Example: Setting temperature to 0.2 for generating standardised contract clauses vs. 0.9 for creative marketing taglines.

Related Terms: Sampling, Creativity, Deterministic Output
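The effect of temperature can be shown with a small numerical sketch. A language model assigns a raw score to each candidate next word; dividing those scores by the temperature before converting them to probabilities makes the distribution sharper (low temperature) or flatter (high temperature). The scores below are made up and the function name is hypothetical.

```python
import math


def softmax_with_temperature(scores, temperature):
    """Convert raw scores to probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be greater than zero")
    scaled = [s / temperature for s in scores]
    highest = max(scaled)
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    scores = [2.0, 1.0, 0.5]  # made-up scores for three candidate words
    for temperature in (0.2, 0.9):
        probs = softmax_with_temperature(scores, temperature)
        print(temperature, [round(p, 3) for p in probs])
```

At 0.2 almost all of the probability lands on the top-scoring word, giving consistent output; at 0.9 the alternatives keep a meaningful share, giving more varied output.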

37. Retrieval-Augmented Generation (RAG)

Definition: Enhancing AI outputs by retrieving relevant information from external sources (databases, documents) before generating responses.

Why It Matters: RAG can substantially reduce hallucinations (though it does not eliminate them) and enables AI to access current, company-specific information. Essential for many enterprise AI applications.

Example: A customer service chatbot using RAG to retrieve accurate product specifications from your database before answering technical questions.

Related Terms: Knowledge Base, Grounding, Accuracy Enhancement
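The retrieve-then-generate flow can be sketched as follows. This is a deliberately simplified illustration: it ranks documents by shared words, whereas production systems typically use embeddings and a vector database, and it stops at building the prompt rather than calling a language model. The documents, product name, and function names are all hypothetical.

```python
import re


def words(text):
    """Lower-case word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Return the documents sharing the most words with the question."""
    question_words = words(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & words(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents):
    """Combine the retrieved context with the question for the language model."""
    context = "\n".join(retrieve(question, documents))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    knowledge_base = [  # made-up company documents
        "The X100 kettle has a 1.7 litre capacity.",
        "Returns are accepted within 30 days.",
    ]
    print(build_prompt("What is the capacity of the X100 kettle?", knowledge_base))
```

The resulting prompt would then be sent to an LLM, which answers from the supplied context instead of relying solely on what it memorised during training.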

38. Vector Database

Definition: Specialised databases that store embeddings and enable fast similarity searching - finding related items based on semantic meaning, not just keywords.

Why It Matters: Vector databases power modern search, recommendation, and RAG systems. Essential infrastructure for advanced AI applications.

Example: E-commerce product search using vector databases to find "comfortable walking shoes" even when product descriptions don't use those exact words.

Related Terms: Embeddings, Semantic Search, Similarity Matching

39. Model Quantisation

Definition: Reducing the precision of an AI model's parameters to decrease size and computational requirements whilst maintaining acceptable performance.

Why It Matters: Quantisation enables running large models on less powerful hardware, reducing costs and enabling edge deployment.

Example: Deploying a quantised version of an image recognition model on mobile devices instead of requiring cloud processing.

Related Terms: Model Compression, Optimisation, Edge AI
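A minimal sketch of the idea, assuming a simple symmetric 8-bit scheme: each parameter is stored as a whole number between -127 and 127 plus one shared scale factor, instead of as a full-precision decimal. The weights are made-up values and the function names are hypothetical; real toolkits use more sophisticated schemes.

```python
def quantise(weights):
    """Map floating-point weights to integers in the range -127..127."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantise(quantised, scale):
    """Recover approximate floating-point weights from the integers."""
    return [q * scale for q in quantised]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]  # made-up model parameters
    quantised, scale = quantise(weights)
    restored = dequantise(quantised, scale)
    print(quantised)
    print([round(r, 4) for r in restored])
```

The restored values differ slightly from the originals. That small loss of precision is the price paid for a model roughly a quarter of the size of one stored as 32-bit numbers.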

40. A/B Testing

Definition: Comparing two AI approaches by randomly assigning users to different versions and measuring performance differences.

Why It Matters: A/B testing provides empirical evidence of what works. Essential for optimising AI systems and proving ROI.

Example: Testing two different chatbot personalities to see which achieves higher customer satisfaction scores.

Related Terms: Experimental Design, Performance Measurement, Optimisation
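Whether a difference between two versions is real or just chance is usually checked with a statistical test. The sketch below uses a standard two-proportion z-test on made-up satisfaction figures; the function name is hypothetical, and the 0.05 significance threshold is a common convention rather than a rule.

```python
import math


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Return the z-score and two-sided p-value for B's rate versus A's."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_error
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p_value


if __name__ == "__main__":
    # Made-up results: satisfied customers out of 1,000 chats per chatbot version
    z, p_value = two_proportion_z_test(400, 1000, 460, 1000)
    verdict = "likely a real difference" if p_value < 0.05 else "could be chance"
    print(f"z = {z:.2f}, p = {p_value:.4f}: {verdict}")
```

This sketch does not handle the edge case where every user (or no user) is satisfied in both groups, and it assumes users were assigned to versions at random.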

Speak the Language of AI with Confidence

Understanding AI terminology is just the beginning. The real challenge is applying this knowledge to make strategic decisions for your business.

The Consultancy World helps business leaders translate technical AI concepts into practical business strategies.

We speak both languages - technical AI and business strategy - ensuring you can:

✓ Evaluate vendor claims critically

✓ Ask the right questions during AI procurement

✓ Set realistic expectations for AI initiatives

✓ Make informed decisions about AI investments

In a complimentary consultation, we'll:

• Discuss your specific AI opportunities in plain language

• Explain relevant technical concepts for your situation

• Help you ask vendors the right questions

Category 5: AI Ethics and Governance

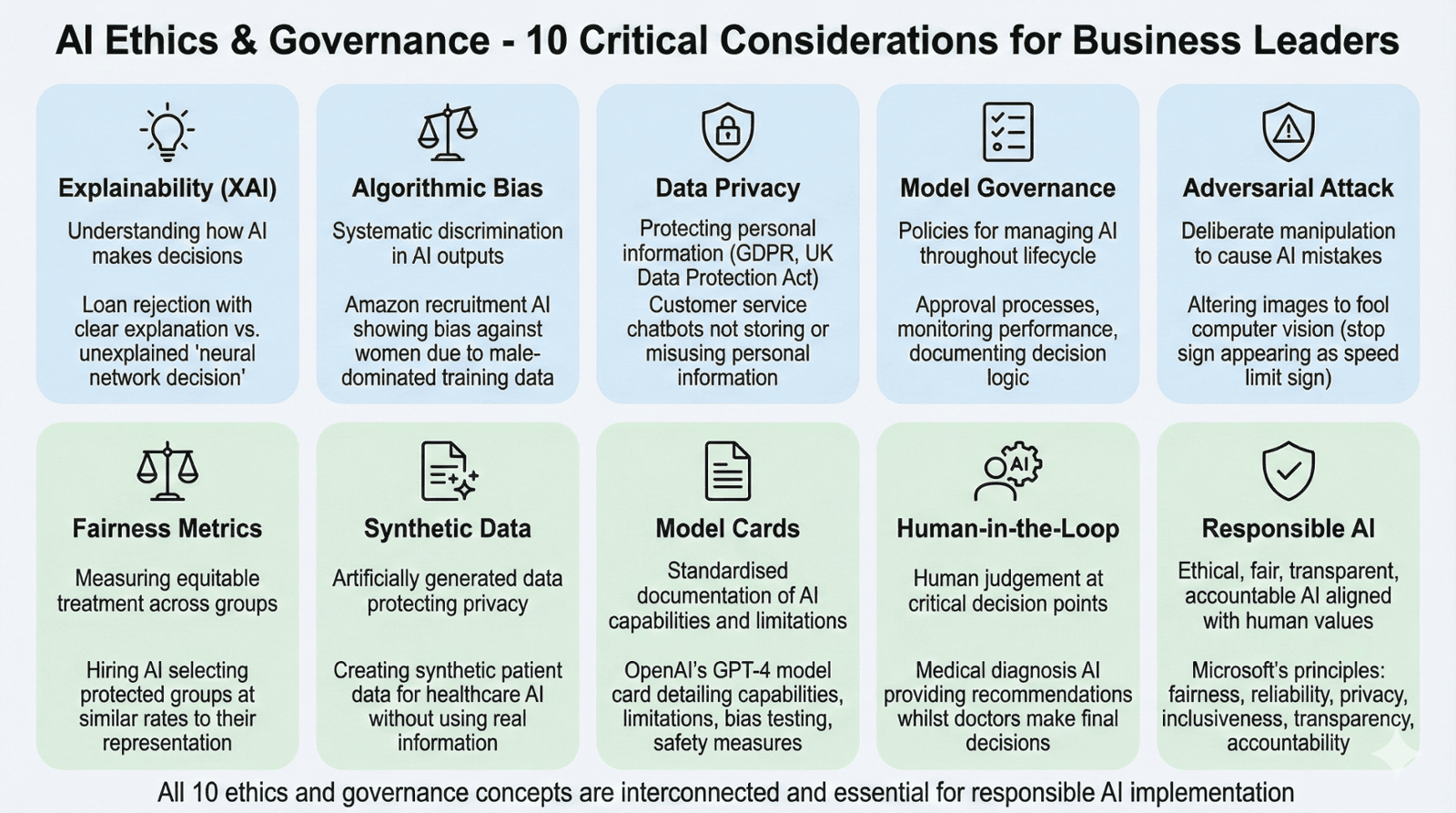

41. Explainability (XAI)

Definition: The ability to understand and explain how an AI system makes decisions. Also called "Explainable AI" or "Interpretable AI."

Why It Matters: Critical for regulated industries, high-stakes decisions, and building user trust. Some AI methods are more explainable than others.

Example: A loan rejection system explaining "credit score below threshold" vs. "neural network decision" without further explanation.

Related Terms: Interpretability, Transparency, Black Box

42. Algorithmic Bias

Definition: Systematic and unfair discrimination in AI outputs caused by biased training data, flawed algorithms, or problematic design choices.

Why It Matters: Legal liability, ethical concerns, and reputational damage. Essential consideration for AI in hiring, lending, criminal justice, or customer-facing roles.

Example: Amazon's experimental recruitment AI showing bias against women because it was trained on historical hiring data from a male-dominated company.

Related Terms: Fairness, Discrimination, Bias Mitigation

43. Data Privacy

Definition: Protecting personal and sensitive information used by or processed through AI systems, complying with regulations like GDPR and the UK Data Protection Act 2018.

Why It Matters: Legal requirements, customer trust, and ethical responsibility. AI systems often process sensitive data, raising privacy concerns.

Example: Ensuring customer service chatbots don't store or misuse personal customer information shared during conversations.

Related Terms: GDPR, Confidentiality, Data Protection

44. Model Governance

Definition: Policies, processes, and controls for managing AI models throughout their lifecycle - development, deployment, monitoring, and retirement.

Why It Matters: Reduces risk, ensures compliance, maintains quality, and provides accountability for AI systems in production.

Example: Establishing approval processes for deploying new AI models, monitoring performance, and documenting decision logic.

Related Terms: AI Governance, Model Registry, Compliance

45. Adversarial Attack

Definition: Deliberately manipulating inputs to cause AI systems to make mistakes or produce unintended outputs.

Why It Matters: Security concern for AI systems, especially in fraud detection, authentication, or safety-critical applications.

Example: Subtly altering images to fool computer vision systems - making a stop sign appear as a speed limit sign to autonomous vehicles.

Related Terms: Security, Robustness, Attack Surface

46. Fairness Metrics

Definition: Quantitative measures used to assess whether an AI system treats different groups equitably, identifying potential discrimination.

Why It Matters: Essential for evaluating AI systems in sensitive applications. Multiple fairness definitions exist, sometimes conflicting.

Example: Measuring whether a hiring AI selects candidates from protected groups at similar rates to their representation in qualified applicants.

Related Terms: Bias Testing, Equity, Discrimination Assessment
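One widely used fairness measure compares selection rates between groups. The sketch below computes the ratio between two groups' selection rates and checks it against the "four-fifths" rule of thumb from US employment guidance, under which a ratio below 0.8 is treated as a signal for closer review. The applicant numbers are made up and the function names are hypothetical.

```python
def selection_rate(selected, applicants):
    """Share of qualified applicants in a group who were selected."""
    return selected / applicants


def disparate_impact_ratio(group_rate, reference_rate):
    """Ratio of a group's selection rate to the reference group's rate."""
    return group_rate / reference_rate


if __name__ == "__main__":
    # Made-up figures for illustration
    rate_group_a = selection_rate(selected=50, applicants=100)
    rate_group_b = selection_rate(selected=30, applicants=100)
    ratio = disparate_impact_ratio(rate_group_b, rate_group_a)
    flag = "review needed" if ratio < 0.8 else "within rule of thumb"
    print(f"Ratio: {ratio:.2f} ({flag})")
```

This is only one of several fairness definitions, and as noted above they can conflict, so a single ratio should prompt investigation rather than serve as a final verdict.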

47. Synthetic Data

Definition: Artificially generated data that mimics real data characteristics but doesn't contain actual sensitive information.

Why It Matters: Enables AI development and testing whilst protecting privacy. Growing importance as privacy regulations tighten.

Example: Creating synthetic patient data for healthcare AI training without using real patient information.

Related Terms: Data Augmentation, Privacy Protection, Training Data

48. Model Cards

Definition: Standardised documentation describing an AI model's capabilities, limitations, training data, performance metrics, and appropriate use cases.

Why It Matters: Promotes transparency, helps users understand when and how to use AI responsibly, and supports compliance.

Example: OpenAI publishing a system card (a closely related form of documentation) for GPT-4 detailing its capabilities, limitations, and safety measures.

Related Terms: Documentation, Transparency, Responsible AI

49. Human-in-the-Loop

Definition: AI systems that incorporate human judgment at critical points, rather than operating fully autonomously.

Why It Matters: Reduces risk, improves quality, and maintains accountability for important decisions. Recommended for high-stakes AI applications.

Example: Medical diagnosis AI providing recommendations to doctors who make final decisions, rather than autonomous diagnosis.

Related Terms: Human Oversight, Hybrid Intelligence, Safety Measures

50. Responsible AI

Definition: Developing and deploying AI systems that are ethical, fair, transparent, accountable, and aligned with human values and societal benefit.

Why It Matters: Long-term business sustainability, regulatory compliance, customer trust, and moral responsibility. Increasingly important as AI becomes pervasive.

Example: Microsoft's responsible AI principles guiding development of Azure AI services - fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability.

Related Terms: AI Ethics, Trustworthy AI, AI Governance

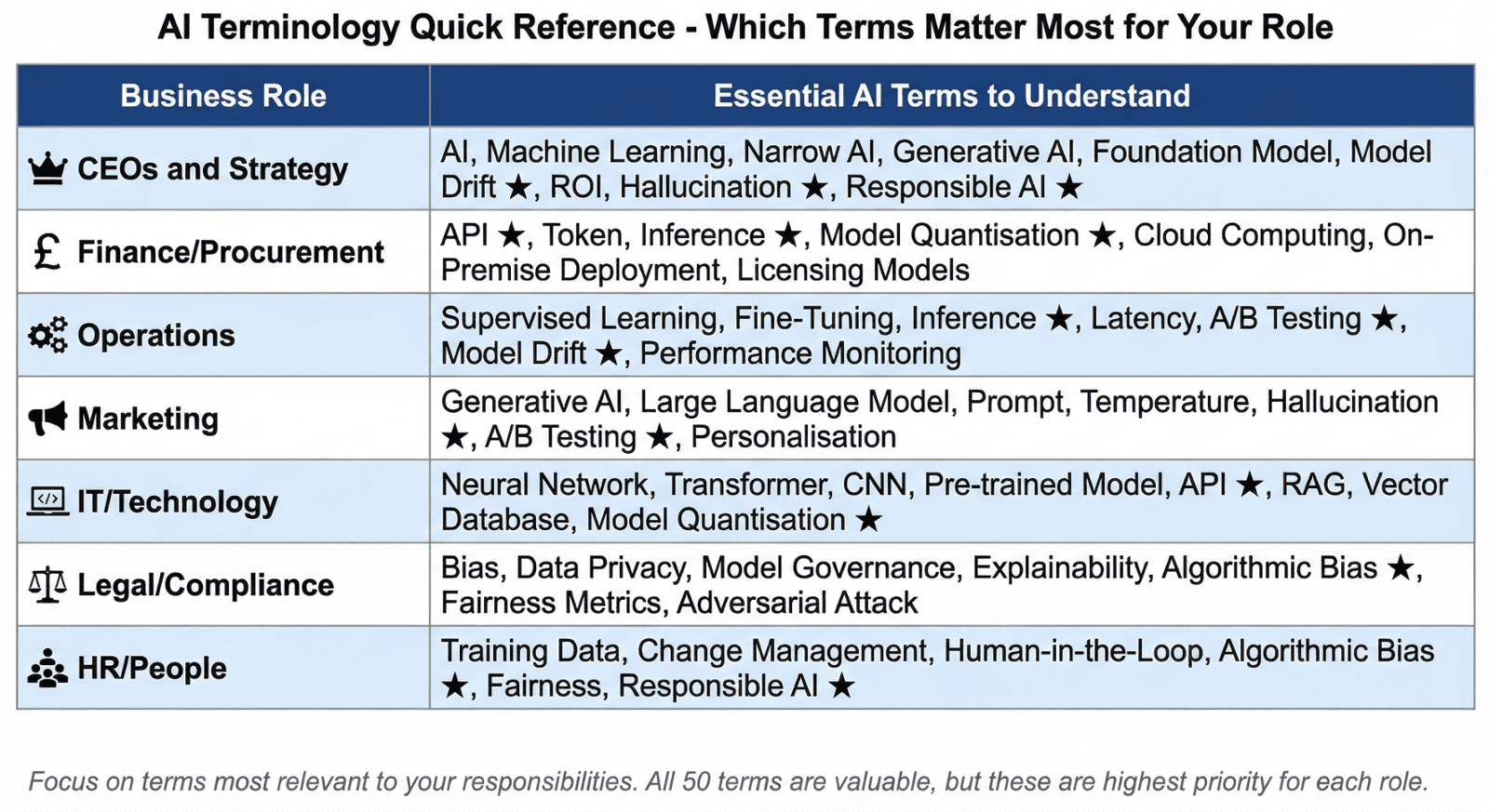

Quick Reference: Terms by Business Function

For CEOs and Strategy:

AI, Machine Learning, Narrow AI, Generative AI, Foundation Model, Model Drift, ROI, Hallucination, Responsible AI

For Finance/Procurement:

API, Token, Inference, Model Quantisation, Cloud Computing, On-Premise Deployment, Licensing Models

For Operations:

Supervised Learning, Fine-Tuning, Inference, Latency, A/B Testing, Model Drift, Performance Monitoring

For Marketing:

Generative AI, Large Language Model, Prompt, Temperature, Hallucination, A/B Testing, Personalisation

For IT/Technology:

Neural Network, Transformer, CNN, Pre-trained Model, API, RAG, Vector Database, Model Quantisation

For Legal/Compliance:

Bias, Data Privacy, Model Governance, Explainability, Algorithmic Bias, Fairness Metrics, Adversarial Attack

For HR/People:

Training Data, Change Management, Human-in-the-Loop, Algorithmic Bias, Fairness, Responsible AI

Conclusion: Knowledge Empowers Decision-Making

Understanding AI terminology transforms you from a passive audience for technical jargon into an informed decision-maker who can evaluate claims, ask probing questions, and make confident strategic choices.

Key Takeaways:

1. AI vocabulary isn't impenetrable. Most concepts have straightforward business explanations once jargon is removed.

2. Different stakeholders need different terms. Focus on vocabulary relevant to your role and responsibilities.

3. Understanding terminology helps you assess vendor claims. You can now recognise when vendors are overpromising or using buzzwords without substance.

4. This knowledge improves communication. You can now effectively discuss AI with technical teams, vendors and board members.

5. Terminology evolves rapidly. New terms emerge as AI advances. Commit to continuous learning.

Your next step is applying this knowledge to strategic AI decisions for your organisation.

About The Consultancy World

The Consultancy World provides vendor-agnostic AI strategy consulting, translating complex technical concepts into clear business language and actionable recommendations.

We help business leaders navigate the AI landscape without getting lost in jargon or falling for vendor hype.

Based in West Sussex, UK | Serving Clients Globally

Need help translating AI opportunities into a business strategy?

Further Reading from The Consultancy World Learning Library

Continue Your AI Education:

• What Is Artificial Intelligence? A Business Leader's Guide

• Machine Learning vs AI vs Deep Learning: Understanding the Differences

• How AI Actually Learns: Training Data, Models and Algorithms Simplified

• Common AI Myths That Are Holding Your Business Back

This glossary was compiled by The Consultancy World's expert team and reflects current AI terminology as of December 2025. AI language evolves rapidly; check our learning library for updates.

Last Updated: December 18, 2025

Reading Time: 28-32 minutes

Level: Reference / All Levels

Audience: All Business Leaders, Technical and Non-Technical

© 2025 The Consultancy for Business Solutions Ltd. All rights reserved. E&OE